Machine learning, fueled by frameworks like PyTorch and Scikit-learn, is rapidly evolving, offering powerful tools for data analysis and predictive modeling.

PyTorch excels in dynamic computation and Scikit-learn provides streamlined algorithms, creating a robust ecosystem for both research and practical applications.

Resources, including PDFs, detail these frameworks, aiding developers in mastering techniques like GANs and optimization methods, even with limited hardware.

Overview of Machine Learning

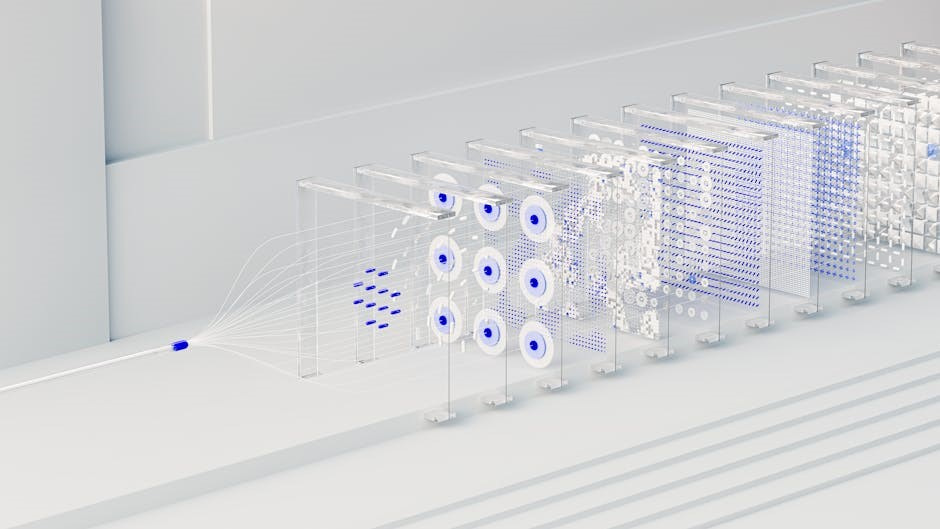

Machine Learning (ML) encompasses algorithms enabling systems to learn from data without explicit programming. This field broadly divides into supervised, unsupervised, and reinforcement learning, each suited for different tasks. PyTorch and Scikit-learn are pivotal tools, though differing in approach.

Scikit-learn shines in traditional ML tasks – classification, regression, clustering – offering a user-friendly interface and efficient algorithms. It’s ideal for quick prototyping and deployment. Conversely, PyTorch, a Python-based framework, excels in deep learning, providing flexibility through dynamic computation graphs.

PDF resources often highlight PyTorch’s strength in areas like computer vision and natural language processing, where complex neural networks are essential. The synergy between both libraries is powerful; Scikit-learn handles preprocessing, while PyTorch builds and trains sophisticated models. Understanding both is crucial for a comprehensive ML skillset, as evidenced by growing adoption in both academic and industrial settings.

The Synergy Between PyTorch and Scikit-learn

PyTorch and Scikit-learn, while distinct, demonstrate remarkable synergy in a machine learning workflow. Scikit-learn excels at data preprocessing – scaling, feature selection, dimensionality reduction – preparing data for more complex models. This preprocessed data can then seamlessly feed into PyTorch for deep learning tasks.

PyTorch models can also be plugged into Scikit-learn pipelines, provided they are wrapped in a class that exposes the estimator interface (fit and predict). Wrapped this way, they can use Scikit-learn’s cross-validation and grid search utilities for model evaluation and selection. PDF guides frequently illustrate this integration, showing how to combine the strengths of both frameworks.

Furthermore, PyTorch’s pre-trained models facilitate transfer learning, where knowledge gained from one task is applied to another. The fine-tuning itself happens in PyTorch; Scikit-learn contributes on the data and evaluation side, for example through its splitting utilities and metrics. Used together, the two libraries cover most stages of a typical ML project.

Setting Up Your Environment

Establishing a suitable environment is crucial; install PyTorch and Scikit-learn using pip, referencing official PDF guides for version compatibility and GPU configuration.

Installing PyTorch

PyTorch installation involves selecting the appropriate build for your system and CUDA availability. The official PyTorch website provides a tailored installation command, which simplifies the process. Installing with pip is a common choice and keeps later updates straightforward.

PDF guides often detail specific steps for various operating systems, addressing potential installation issues. For GPU support, choose a PyTorch build whose CUDA version is supported by your NVIDIA driver; the official pip wheels bundle the CUDA runtime and cuDNN, so the driver is usually the component to check. Troubleshooting common errors, such as those encountered during CUDA setup, is frequently covered in online resources and community forums.

Activate your virtual environment before installation to avoid conflicts with other Python packages. After installation, verify it by importing PyTorch and checking CUDA availability from a Python script. Detailed installation instructions, including those for older PyTorch and CUDA combinations, are readily available online.
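The post-installation check described above can be sketched in a few lines. This is a minimal example, not an official verification procedure; it only reads attributes that PyTorch exposes, and the variable names are illustrative.

```python
import torch

# Report the installed version and whether a CUDA-capable GPU is visible.
torch_version = torch.__version__
cuda_available = torch.cuda.is_available()
print(f"PyTorch {torch_version}, CUDA available: {cuda_available}")

# torch.version.cuda is None for CPU-only builds.
print(f"Built against CUDA: {torch.version.cuda}")
```

If `cuda_available` is False on a machine with an NVIDIA GPU, the usual suspects are a CPU-only build or an outdated driver.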

Installing Scikit-learn

Scikit-learn, a cornerstone of traditional machine learning in Python, boasts a straightforward installation process primarily through pip. Activating a virtual environment is highly recommended to maintain project isolation and avoid dependency conflicts.

PDF documentation and online tutorials consistently highlight pip as the preferred installation method: `pip install scikit-learn`. This command automatically resolves and installs the necessary dependencies. Verification is simple: import the library in a Python interpreter and check the version.

Unlike PyTorch, Scikit-learn generally doesn’t require GPU-specific installations, making it accessible on a wider range of systems. However, ensure your Python and pip are up-to-date for optimal compatibility. Comprehensive installation guides, often available as downloadable PDFs, address potential issues and provide detailed troubleshooting steps.
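As a quick sanity check after installing, the version lookup mentioned above might look like this (a minimal sketch; the variable name is illustrative):

```python
import sklearn

# The package is installed as "scikit-learn" but imported as "sklearn".
sklearn_version = sklearn.__version__
print(f"scikit-learn {sklearn_version}")
```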

CUDA and GPU Configuration for PyTorch

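Leveraging a GPU significantly accelerates PyTorch computations, particularly for deep learning models. However, proper CUDA configuration is crucial. This involves installing a suitable NVIDIA driver and a CUDA-enabled build of PyTorch. The official pip wheels bundle the CUDA runtime they need, so a separate CUDA toolkit installation is typically only required when compiling custom CUDA extensions or building PyTorch from source.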

PDF guides often emphasize compatibility between PyTorch versions and CUDA versions (e.g., PyTorch 1.10.0 with CUDA 11.3). The PyTorch website provides specific installation commands based on your system configuration. Using pip with the appropriate flags (e.g., `pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118`) selects a GPU-enabled build.

Troubleshooting often involves verifying driver versions and ensuring the CUDA path is correctly set. Numerous online resources and downloadable PDFs detail these steps, offering solutions for common installation problems and version conflicts.

Fundamentals of PyTorch

PyTorch’s core consists of Tensors, which enable efficient numerical operations, and Autograd, which provides automatic differentiation. Neural networks are built by subclassing `nn.Module`.

PDF resources detail these concepts, aiding in understanding dynamic computation graphs and gradient descent optimization techniques.

Tensors and Operations

Tensors are the fundamental data structures in PyTorch, analogous to NumPy arrays but with the added benefit of GPU acceleration. They can represent scalars, vectors, matrices, and higher-dimensional arrays, forming the basis for all numerical computations within the framework.

PyTorch provides a rich set of operations for manipulating tensors, including arithmetic operations (+, -, *, /), matrix multiplication (torch.matmul), reshaping (torch.reshape), and slicing. These operations are designed to be efficient and seamlessly integrated with the Autograd engine for automatic differentiation.

Understanding tensor operations is crucial for building and training neural networks. PDF guides often demonstrate these operations with practical examples, illustrating how to perform common tasks like data preprocessing, feature engineering, and model evaluation. Mastering tensors unlocks the full potential of PyTorch for machine learning tasks.

Furthermore, PyTorch supports various tensor data types (e.g., float32, float64, int64) to optimize memory usage and computational performance.
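To make the operations listed above concrete, here is a small sketch using hand-picked values. The tensor names are illustrative and not taken from any particular guide.

```python
import torch

a = torch.tensor([[1.0, 2.0], [3.0, 4.0]])  # float32 by default
b = torch.ones(2, 2)

summed = a + b                    # element-wise addition
product = torch.matmul(a, b)      # matrix multiplication
flat = torch.reshape(a, (4,))     # reshape to a 1-D tensor
first_col = a[:, 0]               # slicing: first column
as_double = a.to(torch.float64)   # change the data type

print(product)
print(flat, first_col, as_double.dtype)
```

Choosing `float32` over `float64` halves memory use per element, which is why it is the default for most deep learning work.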

Autograd and Gradient Descent

Autograd is PyTorch’s automatic differentiation engine, a cornerstone of deep learning. It tracks all operations performed on tensors with gradients enabled, allowing for the efficient computation of derivatives – essential for training neural networks via gradient descent.

Gradient descent iteratively adjusts model parameters to minimize a loss function. PyTorch’s Autograd calculates the gradients of the loss with respect to each parameter, guiding the optimization process. This eliminates the need for manual derivative calculations, simplifying model development.

PDF resources dedicated to PyTorch often detail Autograd’s functionality, showcasing how to use it with optimizers like SGD and Adam. Understanding Autograd is vital for customizing training loops and implementing advanced optimization techniques. It’s a key component for building effective machine learning models.

The `requires_grad=True` flag is crucial for enabling gradient tracking on tensors.
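The interplay between Autograd and gradient descent can be illustrated on a one-parameter problem. The sketch below minimizes the toy function f(w) = (w - 3)^2 by hand, without an optimizer class; the function and the learning rate are assumptions chosen for illustration.

```python
import torch

# Minimize f(w) = (w - 3)^2 with plain gradient descent.
w = torch.tensor(0.0, requires_grad=True)
learning_rate = 0.1

for step in range(100):
    loss = (w - 3.0) ** 2
    loss.backward()                  # autograd fills w.grad with d(loss)/dw
    with torch.no_grad():            # the update itself must not be tracked
        w -= learning_rate * w.grad
    w.grad.zero_()                   # gradients accumulate, so reset them

print(w.item())  # approaches the minimum at w = 3
```

In practice the manual update is replaced by an optimizer such as `torch.optim.SGD` or `torch.optim.Adam`, which perform the same step over all parameters.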

Building Neural Networks with `nn.Module`

`nn.Module` is PyTorch’s fundamental building block for creating neural networks. It serves as the base class for all neural network modules, enabling a structured and organized approach to model construction. Defining a network involves subclassing `nn.Module`, implementing the `__init__` method to define layers, and implementing the `forward` method to specify the computation flow.

Layers such as linear transformations (`nn.Linear`), convolutional layers (`nn.Conv2d`), and activation functions (`nn.ReLU`) are readily available in `torch.nn`. These layers are then combined in the forward pass to define the network’s behavior.

PDF tutorials often demonstrate building networks with `nn.Module`, showcasing sequential models and more complex architectures. Mastering `nn.Module` is essential for creating custom neural networks tailored to specific machine learning tasks.

Properly defining the forward pass is crucial for correct computation.
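A minimal subclass might look like the following. The class name `TinyClassifier` and the layer sizes are hypothetical, chosen only to show the `__init__`/`forward` split.

```python
import torch
from torch import nn


class TinyClassifier(nn.Module):
    """Two-layer fully connected network (illustrative sizes)."""

    def __init__(self, in_features=4, hidden=16, n_classes=3):
        super().__init__()
        # Layers are declared here so their parameters get registered.
        self.fc1 = nn.Linear(in_features, hidden)
        self.act = nn.ReLU()
        self.fc2 = nn.Linear(hidden, n_classes)

    def forward(self, x):
        # The computation flow: linear -> ReLU -> linear.
        return self.fc2(self.act(self.fc1(x)))


model = TinyClassifier()
logits = model(torch.randn(8, 4))   # batch of 8 samples, 4 features each
n_params = sum(p.numel() for p in model.parameters())
print(logits.shape, n_params)
```

Calling `model(x)` rather than `model.forward(x)` is the usual convention, since it also runs any registered hooks.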

Scikit-learn for Traditional Machine Learning

Scikit-learn provides efficient tools for supervised and unsupervised learning, model evaluation, and data preprocessing, complementing PyTorch’s deep learning capabilities.

PDF guides detail Scikit-learn’s algorithms, offering practical examples for classification, regression, and clustering tasks.

Supervised Learning with Scikit-learn

Scikit-learn shines in supervised learning, offering a comprehensive suite of algorithms for both classification and regression tasks. These algorithms, readily available and well-documented, empower developers to build predictive models from labeled datasets.

Classification algorithms like Logistic Regression, Support Vector Machines (SVMs), and Decision Trees are easily implemented, allowing for categorization of data points. Regression algorithms, including Linear Regression, Ridge Regression, and Random Forest Regressor, predict continuous values.

PDF resources and tutorials demonstrate practical applications, guiding users through data preparation, model training, and performance evaluation. Scikit-learn’s consistent API simplifies experimentation and model selection. The library’s focus on usability makes it an excellent choice for rapid prototyping and deployment of supervised learning solutions, often integrated with PyTorch for complex tasks.

Furthermore, Scikit-learn provides tools for cross-validation and hyperparameter tuning, ensuring robust and generalizable models.
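The consistent fit/predict API described above can be seen in a short classification sketch. The choice of the bundled Iris dataset, the split ratio, and the random seed are assumptions made for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)

# Hold out a quarter of the data for testing, keeping class proportions.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

clf = LogisticRegression(max_iter=1000)
clf.fit(X_train, y_train)                      # train on labeled data
accuracy = accuracy_score(y_test, clf.predict(X_test))
print(f"Test accuracy: {accuracy:.3f}")
```

Swapping `LogisticRegression` for another classifier such as `SVC` or `DecisionTreeClassifier` requires no other changes, which is what makes quick model comparison practical.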

Unsupervised Learning with Scikit-learn

Scikit-learn provides a robust toolkit for unsupervised learning, enabling the discovery of hidden patterns and structures within unlabeled data. Algorithms like K-Means clustering, Principal Component Analysis (PCA), and DBSCAN are readily available for dimensionality reduction and grouping similar data points.

PCA reduces dimensionality by projecting data onto the directions of greatest variance, while K-Means partitions data into clusters by assigning each point to its nearest centroid. DBSCAN excels at identifying clusters of varying shapes, even in noisy datasets, by grouping points in dense regions and marking isolated points as noise.

PDF guides and tutorials illustrate how to apply these techniques to real-world problems, such as customer segmentation and anomaly detection. Scikit-learn’s intuitive interface simplifies the process of exploring and understanding complex datasets. These methods often complement PyTorch-based models for feature extraction or data preprocessing, enhancing overall model performance.

The library also offers tools for evaluating clustering performance and visualizing results.
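As a rough sketch of how these pieces fit together, the example below reduces synthetic data with PCA, clusters it with K-Means, and scores the result with the silhouette coefficient. The synthetic blobs and all parameter values are assumptions for illustration.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.decomposition import PCA
from sklearn.metrics import silhouette_score

# Synthetic, unlabeled 5-dimensional data drawn around three centers.
X, _ = make_blobs(n_samples=300, centers=3, n_features=5, random_state=0)

# Project onto the two directions of greatest variance.
X_2d = PCA(n_components=2).fit_transform(X)

# Partition the projected data into three clusters.
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0)
labels = kmeans.fit_predict(X_2d)

# Silhouette score lies in [-1, 1]; higher means better-separated clusters.
score = silhouette_score(X_2d, labels)
print(f"Silhouette score: {score:.3f}")
```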

Model Evaluation and Selection

Scikit-learn offers comprehensive tools for rigorously evaluating machine learning models, which is crucial for ensuring reliable performance. K-fold cross-validation provides more robust estimates of generalization performance than a single train/test split and helps reveal overfitting. Metrics such as accuracy, precision, recall, F1-score, and ROC AUC are readily available for assessing model effectiveness.

PDF resources detail how to interpret these metrics and select the optimal model based on specific business objectives. Grid search and randomized search automate the hyperparameter tuning process, optimizing model configurations.

Furthermore, Scikit-learn facilitates model comparison, allowing data scientists to identify the best-performing algorithm for a given task. Understanding these evaluation methods is vital for building trustworthy and impactful machine learning solutions, often integrated with PyTorch models.
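Cross-validated hyperparameter search, as outlined above, can be sketched with `GridSearchCV`. The dataset, the SVM model, the candidate values for `C`, and the F1 scoring choice are all assumptions made for this example.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_breast_cancer(return_X_y=True)

# Scaling lives inside the pipeline so it is re-fit on each training fold.
pipe = make_pipeline(StandardScaler(), SVC())
param_grid = {"svc__C": [0.1, 1, 10]}

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
search = GridSearchCV(pipe, param_grid, cv=cv, scoring="f1")
search.fit(X, y)

print(search.best_params_, round(search.best_score_, 3))
```

Keeping the scaler inside the pipeline avoids leaking statistics from the validation folds into training, a common source of over-optimistic scores.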

Combining PyTorch and Scikit-learn

Synergizing PyTorch’s flexibility with Scikit-learn’s pipelines enables powerful workflows; PDF guides demonstrate preprocessing with Scikit-learn and integrating PyTorch models seamlessly.

Using Scikit-learn for Data Preprocessing

Scikit-learn provides a comprehensive suite of tools for preparing data before feeding it into PyTorch models. This includes essential steps like scaling, normalization, and feature selection, significantly impacting model performance.

PDF resources often highlight Scikit-learn’s StandardScaler and MinMaxScaler for numerical data, while OneHotEncoder handles categorical features effectively. Utilizing these preprocessing techniques ensures data is in an optimal format for PyTorch’s tensor operations.

Furthermore, Scikit-learn’s train_test_split function is invaluable for creating training and validation sets, crucial for evaluating model generalization. Pipelines can be constructed to chain multiple preprocessing steps, streamlining the workflow and enhancing reproducibility. Properly preprocessed data leads to faster training and improved accuracy in PyTorch models.

Leveraging Scikit-learn for preprocessing allows developers to focus on model architecture and training within PyTorch, maximizing efficiency and results.
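A minimal hand-off from Scikit-learn preprocessing to PyTorch tensors might look like this. The Iris dataset and the split settings are assumptions; the key point is that the scaler is fit on the training split only.

```python
import numpy as np
import torch
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y
)

# Fit the scaler on training data only, then apply it to both splits.
scaler = StandardScaler().fit(X_train)
X_train_t = torch.from_numpy(scaler.transform(X_train).astype(np.float32))
X_val_t = torch.from_numpy(scaler.transform(X_val).astype(np.float32))

# Class labels become int64 tensors, as expected by nn.CrossEntropyLoss.
y_train_t = torch.from_numpy(y_train).long()
y_val_t = torch.from_numpy(y_val).long()

print(X_train_t.shape, X_train_t.dtype, y_train_t.dtype)
```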

Integrating PyTorch Models into Scikit-learn Pipelines

Scikit-learn pipelines offer a streamlined approach to machine learning workflows, and PyTorch models can be seamlessly integrated into these pipelines. This allows leveraging Scikit-learn’s preprocessing, evaluation, and model selection tools alongside the flexibility of PyTorch.

PDF guides demonstrate creating custom wrapper classes around PyTorch models, enabling them to function as Scikit-learn estimators. This involves defining `fit` and `predict` methods that interface with the PyTorch model.

Benefits include simplified cross-validation, grid search, and model persistence. Pipelines enhance code organization and reproducibility. Utilizing this integration allows for a cohesive machine learning process, combining the strengths of both frameworks.

Effectively, it bridges the gap between traditional machine learning and deep learning approaches, offering a powerful and versatile solution.
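One way to sketch such a wrapper is shown below. The class `TorchClassifier`, its hyperparameters, and the small network inside it are hypothetical and written for this example; maintained libraries such as skorch offer a more complete version of the same idea.

```python
import numpy as np
import torch
from torch import nn
from sklearn.base import BaseEstimator, ClassifierMixin
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


class TorchClassifier(ClassifierMixin, BaseEstimator):
    """Hypothetical wrapper exposing a small PyTorch net as an estimator."""

    def __init__(self, hidden=16, epochs=200, lr=0.05):
        # Only store hyperparameters here so sklearn can clone the object.
        self.hidden = hidden
        self.epochs = epochs
        self.lr = lr

    def fit(self, X, y):
        torch.manual_seed(0)  # fixed seed for repeatable illustration
        self.classes_ = np.unique(y)
        y_idx = np.searchsorted(self.classes_, y)  # labels -> 0..n-1
        X_t = torch.as_tensor(np.asarray(X), dtype=torch.float32)
        y_t = torch.as_tensor(y_idx, dtype=torch.long)
        self.model_ = nn.Sequential(
            nn.Linear(X_t.shape[1], self.hidden),
            nn.ReLU(),
            nn.Linear(self.hidden, len(self.classes_)),
        )
        optimizer = torch.optim.Adam(self.model_.parameters(), lr=self.lr)
        loss_fn = nn.CrossEntropyLoss()
        for _ in range(self.epochs):  # full-batch training loop
            optimizer.zero_grad()
            loss_fn(self.model_(X_t), y_t).backward()
            optimizer.step()
        return self

    def predict(self, X):
        X_t = torch.as_tensor(np.asarray(X), dtype=torch.float32)
        with torch.no_grad():
            idx = self.model_(X_t).argmax(dim=1).numpy()
        return self.classes_[idx]


X, y = load_iris(return_X_y=True)
pipeline = make_pipeline(StandardScaler(), TorchClassifier())
scores = cross_val_score(pipeline, X, y, cv=3)
print(scores.mean())
```

Because the hyperparameters are plain constructor arguments, the same wrapper can be passed to `GridSearchCV` with a grid such as `{"torchclassifier__hidden": [8, 16]}`.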

Transfer Learning with Pre-trained PyTorch Models

Transfer learning significantly accelerates model development by leveraging knowledge gained from pre-trained PyTorch models. These models, often trained on massive datasets like ImageNet, provide a strong foundation for various downstream tasks.

PDF resources detail how to load pre-trained models using torchvision.models and fine-tune them on smaller, task-specific datasets. This approach reduces training time and improves performance, especially when limited labeled data is available.

Techniques include freezing earlier layers to preserve learned features and training only the final classification layers. Alternatively, unfreezing and fine-tuning all layers with a lower learning rate can further optimize performance.

Effectively, transfer learning democratizes access to powerful deep learning capabilities, enabling developers to build high-performing models with limited resources.

Advanced Topics and Resources

Exploring GANs, LSTM optimization, and meta-optimizers within PyTorch expands capabilities; PDF guides offer in-depth knowledge for complex machine learning tasks.

Generative Adversarial Networks (GANs) with PyTorch

Generative Adversarial Networks (GANs) represent a cutting-edge area within machine learning, and PyTorch provides a flexible environment for their implementation. GANs involve training two neural networks – a generator and a discriminator – in a competitive process. The generator attempts to create realistic data samples, while the discriminator tries to distinguish between real and generated data.

PyTorch’s dynamic computation graph is particularly well-suited for GANs, allowing for complex network architectures and custom training loops. Numerous PDF resources and tutorials detail the construction of GANs using PyTorch, covering various architectures like DCGANs and conditional GANs. These resources often include practical examples and code snippets, enabling developers to quickly prototype and experiment with GAN models.

Furthermore, optimization choices matter for GAN training stability and performance; learned optimizers built on LSTM networks, discussed under meta-optimizers later in this section, are one option that some guides explore. The availability of pre-trained models and community-driven libraries further accelerates GAN development within the PyTorch ecosystem.
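The generator/discriminator competition can be sketched on toy 2-D data. Everything here is an assumption for illustration: the tiny fully connected networks, the shifted Gaussian standing in for real data, and the Adam settings. It is not a DCGAN and is not expected to produce a well-trained generator in 50 steps.

```python
import torch
from torch import nn

torch.manual_seed(0)
latent_dim = 8

generator = nn.Sequential(
    nn.Linear(latent_dim, 16), nn.ReLU(), nn.Linear(16, 2)
)
discriminator = nn.Sequential(
    nn.Linear(2, 16), nn.LeakyReLU(0.2), nn.Linear(16, 1)
)

opt_g = torch.optim.Adam(generator.parameters(), lr=2e-4, betas=(0.5, 0.999))
opt_d = torch.optim.Adam(discriminator.parameters(), lr=2e-4, betas=(0.5, 0.999))
bce = nn.BCEWithLogitsLoss()

real = torch.randn(64, 2) + 3.0          # toy stand-in for real samples
ones, zeros = torch.ones(64, 1), torch.zeros(64, 1)

for step in range(50):
    # Discriminator step: label real samples 1 and generated samples 0.
    fake = generator(torch.randn(64, latent_dim))
    d_loss = bce(discriminator(real), ones) + bce(discriminator(fake.detach()), zeros)
    opt_d.zero_grad()
    d_loss.backward()
    opt_d.step()

    # Generator step: try to make the discriminator output 1 for fakes.
    g_loss = bce(discriminator(fake), ones)
    opt_g.zero_grad()
    g_loss.backward()
    opt_g.step()

print(d_loss.item(), g_loss.item())
```

The `fake.detach()` call keeps the discriminator update from propagating gradients into the generator, which is updated separately in the second half of the loop.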

Optimization Techniques (LSTM, Gradient Descent)

Effective optimization is crucial for training machine learning models, and both PyTorch and Scikit-learn offer a range of techniques. Gradient Descent, the foundational algorithm, iteratively adjusts model parameters to minimize a loss function; PyTorch’s Autograd engine automates the calculation of gradients, simplifying this process.

Long Short-Term Memory (LSTM) networks, often used in sequence modeling, require specialized optimization strategies. Techniques like gradient clipping help prevent exploding gradients, a common issue in LSTMs. Many PDF guides and tutorials demonstrate how to implement and optimize LSTMs within PyTorch, detailing best practices for hyperparameter tuning and regularization.

Advanced techniques, including meta-optimizers, dynamically adjust learning rates and other optimization parameters, potentially leading to faster convergence and improved model performance. Resources highlight practical applications and code examples for these methods, enhancing model training efficiency.
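Gradient clipping for an LSTM, mentioned above, might be applied as in this sketch. The random data, layer sizes, and the `max_norm` value of 1.0 are assumptions for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)

lstm = nn.LSTM(input_size=4, hidden_size=8, batch_first=True)
head = nn.Linear(8, 1)
params = list(lstm.parameters()) + list(head.parameters())
optimizer = torch.optim.Adam(params, lr=1e-2)

x = torch.randn(16, 10, 4)   # (batch, sequence length, features)
y = torch.randn(16, 1)

output, (h_n, c_n) = lstm(x)
# Predict from the hidden state at the last time step.
loss = nn.functional.mse_loss(head(output[:, -1, :]), y)

optimizer.zero_grad()
loss.backward()

# Rescale gradients in place so their combined norm is at most max_norm.
max_norm = 1.0
total_norm = torch.nn.utils.clip_grad_norm_(params, max_norm=max_norm)
optimizer.step()

print(f"Gradient norm before clipping: {total_norm.item():.4f}")
```

Clipping goes between `loss.backward()` and `optimizer.step()`: the gradients must exist before they can be rescaled, and must be rescaled before they are used.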

PyTorch Meta-Optimizers

Meta-optimizers represent a sophisticated approach to machine learning optimization, dynamically adjusting the learning process for improved efficiency and performance. Unlike traditional optimizers like SGD or Adam, meta-optimizers learn how to optimize, adapting to the specific characteristics of the model and dataset.

PyTorch provides a flexible environment for implementing and experimenting with various meta-optimization algorithms. Libraries like ikostrikov/pytorch-meta-optimizer offer pre-built implementations, simplifying integration into existing workflows. PDF resources and tutorials detail the theoretical foundations and practical applications of these techniques.

Benefits include faster convergence, improved generalization, and reduced sensitivity to hyperparameter tuning. However, meta-optimizers often introduce increased computational complexity, requiring careful consideration of resource constraints. Exploring these advanced techniques can unlock significant performance gains.

Troubleshooting Common Issues

Installation problems with PyTorch, especially GPU versions via pip, are frequent. PDF guides and CSDN Q&A offer solutions for compatibility and driver conflicts.

PyTorch Installation Problems

Encountering difficulties during PyTorch installation is common, particularly concerning CUDA compatibility and environment setup. Many users report issues with pip, often stemming from incorrect CUDA versions or driver conflicts. Online resources, including detailed PDF guides and platforms like CSDN Q&A, provide troubleshooting steps.

Specifically, ensure your NVIDIA drivers are up-to-date and compatible with the PyTorch version you intend to install. Selecting the correct PyTorch build (CPU-only or GPU-enabled) is crucial. When using pip, make sure the wheel you install targets a CUDA version your driver supports; the official wheels ship their own CUDA runtime, so a system-wide CUDA toolkit is not normally needed.

Furthermore, consider creating a dedicated virtual environment to isolate PyTorch dependencies and avoid conflicts with other packages. If problems persist, consult the official PyTorch documentation and community forums for specific error messages and potential solutions. Remember to check whether a specific project requires an older PyTorch/CUDA combination.

GPU Version Installation with pip

Installing the GPU-enabled version of PyTorch with pip requires careful attention to CUDA compatibility. Begin by verifying your NVIDIA GPU and driver versions, ensuring they meet the minimum requirements specified in the PyTorch documentation. Utilize the PyTorch website’s installation guide to generate the appropriate pip command, selecting your CUDA version.

Execute the generated command within a virtual environment to isolate dependencies. Common issues arise from mismatched CUDA versions; ensure the CUDA version of the PyTorch build is supported by your installed driver. Resources like CSDN Q&A often address specific error messages encountered during installation.

Post-installation, verify the GPU is recognized by PyTorch using a simple tensor operation. If errors persist, double-check driver installations and CUDA paths. PDF guides detailing the process can be invaluable for navigating potential complications.
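The "simple tensor operation" check can be sketched as follows. The code falls back to the CPU when no GPU is visible, so it runs either way; the device printed at the end tells you which path was taken.

```python
import torch

# Use the GPU if PyTorch can see one, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

x = torch.rand(3, 3, device=device)
y = x @ x                      # a small matrix product on the chosen device
print(f"Computation ran on: {y.device}")

if device.type == "cuda":
    # Name of the first visible GPU, useful when debugging driver issues.
    print(torch.cuda.get_device_name(0))
```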